Verilog is the most commonly used language for hardware design in America (VHDL is more common in Europe). Too bad it's so baroque. If you ever browse the Verilog questions on Stack Overflow, you'll find a large number of questions, usually downvoted, asking “why doesn't my code work?”, with code that's not just a little off, but completely wrong.

Lets look at an example: “Idea is to store value of counter at the time of reset . . . I get DRC violations and the memory, bufreadaddr, bufreadval are all optimized out.”

always @(negedge reset or posedge clk) begin

if (reset == 0) begin

d_out <= 16'h0000;

d_out_mem[resetcount] <= d_out;

laststoredvalue <= d_out;

end else begin

d_out <= d_out + 1'b1;

end

end

always @(bufreadaddr)

bufreadval = d_out_mem[bufreadaddr];

We want a counter that keeps track of how many cycles it's been since reset, and we want to store that value in an array-like structure that's indexed by resetcount. If you've read a bit on semantics of Verilog, this is a perfectly natural way to solve the problem. Our poster knows enough about Verilog to use ‘<=' in state elements, so that all of the elements are updated at the same time. Every time there's a clock edge, we'll increment d_out. When reset is 0, we'll store that value and reset d_out. What could possibly go wrong?

The problem is that Verilog was originally designed as a language to describe simulations, so it has constructs to describe arbitrary interactions between events. When X transitions from 0 to 1, do Y. Great! Sounds easy enough. But then someone had the bright idea of using Verilog to represent hardware. The vast majority of statements you could write down don't translate into any meaningful hardware. Your synthesis tool, which translates from Verilog to hardware will helpfully pattern match to the closest available thing, or produce nothing, if you write down something untranslatable. If you're lucky, you might get some warnings.

Looking at the code above, the synthesis tool will see that there's something called dout which should be a clocked element that's set to something when it shouldn't be reset, and is otherwise asynchronously reset. That's a legit hardware construct, so it will produce an N-bit flip-flop and some logic to make it a counter that gets reset to 0. BTW, this paragraph used to contain a link to http://en.wikipedia.org/wiki/Flip-flop(electronics), but ever since I switched to Hugo, my links to URLs with parens in them are broken, so maybe try copy+pasting that URL into your browser window if you want know what a flip-flop is.

Now, what about the value we're supposed to store on reset? Well, the synthesis tool will see that it's inside a block that's clocked. But it's not supposed to do anything when the clock is active; only when reset is asserted. That's pretty unusual. What's going to happen? Well, that depends on which version of which synthesis tool you're using, and how the programmers of that tool decided to implement undefined behavior.

And then there's the block that's supposed to read out the stored value. It looks like the intent is to create a 64:1 MUX. Putting aside the cycle time issues you'll get with such a wide MUX, the block isn't clocked, so the synthesis tool will have to infer some sort of combinational logic. But, the output is only supposed to change if bufreadaddr changes, and not if d_out_mem changes. It's quite easy to describe that in our simulation language, the but the synthesis tool is going to produce something that is definitely not what the user wants here. Not to mention that laststoredvalue isn't meaningfully connected to bufreadvalue.

How is it possible that a reasonable description of something in Verilog turns into something completely wrong in hardware? You can think of hardware as some state, with pure functions connecting the state elements. This makes it natural to think about modeling hardware in a functional programming language. Another natural way to think about it would be with OO. Classes describe how the hardware works. Instances of the class are actual hardware that will get put onto the chip. Yet another natural way to describe things would be declaratively, where you write down constraints the hardware must obey, and the synthesis tool outputs something that meets those constraints.

Verilog does none of these things. To write Verilog that will produce correct hardware, you have to first picture the hardware you want to produce. Then, you have to figure out how to describe that in this weird C-like simulation language. That will then get synthesized into something like what you were imaging in the first step.

As a software engineer, how would you feel if 99% of valid Java code ended up being translated to something that produced random results, even though tests pass on the untranslated Java code? And, by the way, to run tests on the translated Java code you have to go through a multi-day long compilation process, after which your tests will run 200 million times slower than code runs in production. If you're thinking of testing on some sandboxed production machines, sure, go ahead, but it costs 8 figures to push something to any number of your production machines, and it takes 3 months. But, don't worry, you can run the untranslated code only 2 million times slower than in production . People used to statically typed languages often complain that you get run-time errors about things that would be trivial to statically check in a language with stronger types. We hardware folks are so used to the vast majority of legal Verilog constructs producing unsynthesizable garbage that we don't find it the least bit surprising that you not only do you not get compile-time errors, you don't even get run-time errors, from writing naive Verilog code.

Old school hardware engineers will tell you that it's fine. It's fine that the language is so counter-intuitive that almost all people who initially approach Verilog write code that's not just wrong but nonsensical. "All you have to do is figure out the design and then translate it to Verilog". They'll tell you that it's totally fine that the mental model you have of what's going on is basically unrelated to the constructs the language provides, and that they never make errors now that they're experienced, much like some experienced C programmers will erronously tell you that they never have security related buffer overflows or double frees or memory leaks now that they're experienced. It reminds me of talking to assembly programmers who tell me that assembly is as productive as a high level language once you get your functions written. Programmers who haven't talked to old school assembly programmers will think I'm making that up, but I know a number of people who still maintain that assembly is as productive as any high level langauge out there. But people like that are rare and becoming rarer. With hardware, we train up a new generation of people who think that Verilog is as productive as any language could be every few years!

I won't even get into how Verilog is so inexpressive that many companies use an ad hoc tool to embed a scripting language in Verilog or generate Verilog from a scripting language.

There have been a number of attempts to do better than jamming an ad hoc scripting language into Verilog, but they've all fizzled out. As a functional language that's easy to add syntax to, Haskell is a natural choice for Verilog code generation; it spawned ForSyDe, Hydra, Lava, HHDL, and Bluespec. But adoption of ForSyDe, Hydra, Lava, and HHDL is pretty much zero, not because of deficiencies in the language, but because it's politically difficult to get people to use a Haskell based language. Bluespec has done better, but they've done it by making their language look C-like, scrapping the original Haskell syntax and introducing Bluespec SystemVerilog and Bluespec SystemC. The aversion to Haskell is so severe that when we discussed a hardware style at my new gig, one person suggested banning any Haskell based solution, even though Bluespec has been used to good effect in a couple projects within the company.

Scala based solutions look more promising, not for any technical reason, but because Scala is less scary. Scala has managed to bring the modern world (in terms of type systems) to more programmers than ML, Ocaml, Haskell, Agda, etc., combined. Perhaps the same will be true in the hardware world. Chisel is interesting. Like Bluespec, it simulates much more quickly than Verilog, and unsynthesizable representations are syntax errors. It's not as high level, but it's the only hardware description language with a modern type system that I've been able to discuss with hardware folks without people objecting that Haskell is a bad idea.

Commercial vendors are mostly moving in the other direction because C-like languages make people feel all warm and fuzzy. A number of them are pushing high-level hardware synthesis from SystemC, or even straight C or C++. These solutions are also politically difficult to sell, but this time it's the history of the industry, and not the language. Vendors pushing high-level synthesis have a decades long track record of overpromising and underdelivering. I've lost track of the number of times I've heard people dismiss modern offerings with “Why should we believe that this they're for real this time?”

What's the future? Locally, I've managed to convince a couple of people on my team that Chisel is worth looking at. At the moment, none of the Haskell based solutions are even on the table. I'm open to suggestions.

CPU internals series

P.S. Dear hardware folks, sorry for oversimplifying so much. I started writing footnotes explaining everything I was glossing over until I realized that my footnotes were longer than the post. The culled footnotes may make it into their own blog posts some day. A very long footnote that I'll briefly summarize is that semantically correct Verilog simulation is inherently slower than something like Bluespec or Chisel because of the complications involved with the event model. EDA vendors have managed to get decent performance out of Verilog, but only by hiring large teams of the best simulation people in the world to hammer at the problem, the same way JavaScript is fast not because of any property of the language, but because there are amazing people working on the VM. It should tell you something when a tiny team working on a shoestring grant-funded budget can produce a language and simulation infrastructure that smokes existing tools.

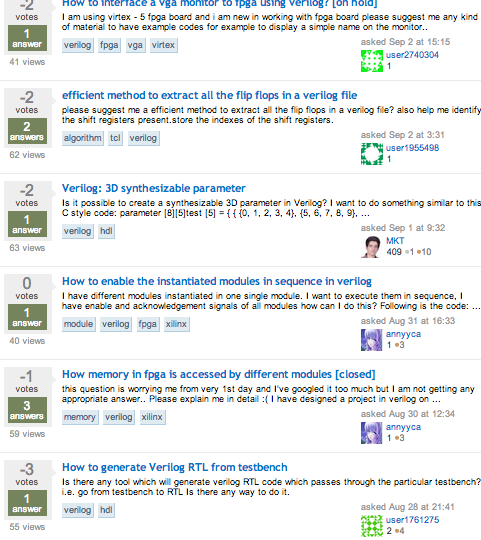

You may wonder why I didn't mention linters. They're a great idea and for reasons I don't understand, two of the three companies I've done hardware development for haven't used linters. If you ask around, everyone will agree that they're a good idea, but even though a linter will run in the thousands to tens of thousands of dollars range, and engineers run in hundreds of thousands of dollars range, it hasn't been politically possible to get a linter even on multi-person teams that have access to tools that cost tens or hundreds of thousands of dollars per license per year. Even though linters are a no-brainer, companies that spend millions to tens of millions a year on hardware development often don't use them, and good SystemVerilog linters are all out of the price range of the people who are asking StackOverflow questions that get downvoted to oblivion.